What We Learned from 5 Years of PBR Pipeline Battles

By Mira Kapoor | 7 November 2025 | 15 min read

We've all mastered the PBR basics. Metalness, roughness, and albedo are second nature by now. But being an expert today is less about knowing the rules and more about wrestling with the stubborn, real-world problems that come with a mature workflow. This isn't another PBR 101. We’re going to unpack the friction in modern texturing pipelines, from consistency conundrums to technical limits, and then look ahead at the AI, proceduralism, and universal standards set to redefine the next decade of rendering. Keeping your pipeline efficient isn't just about solving today's bottlenecks; it's about preparing for tomorrow's tools without grinding production to a halt.

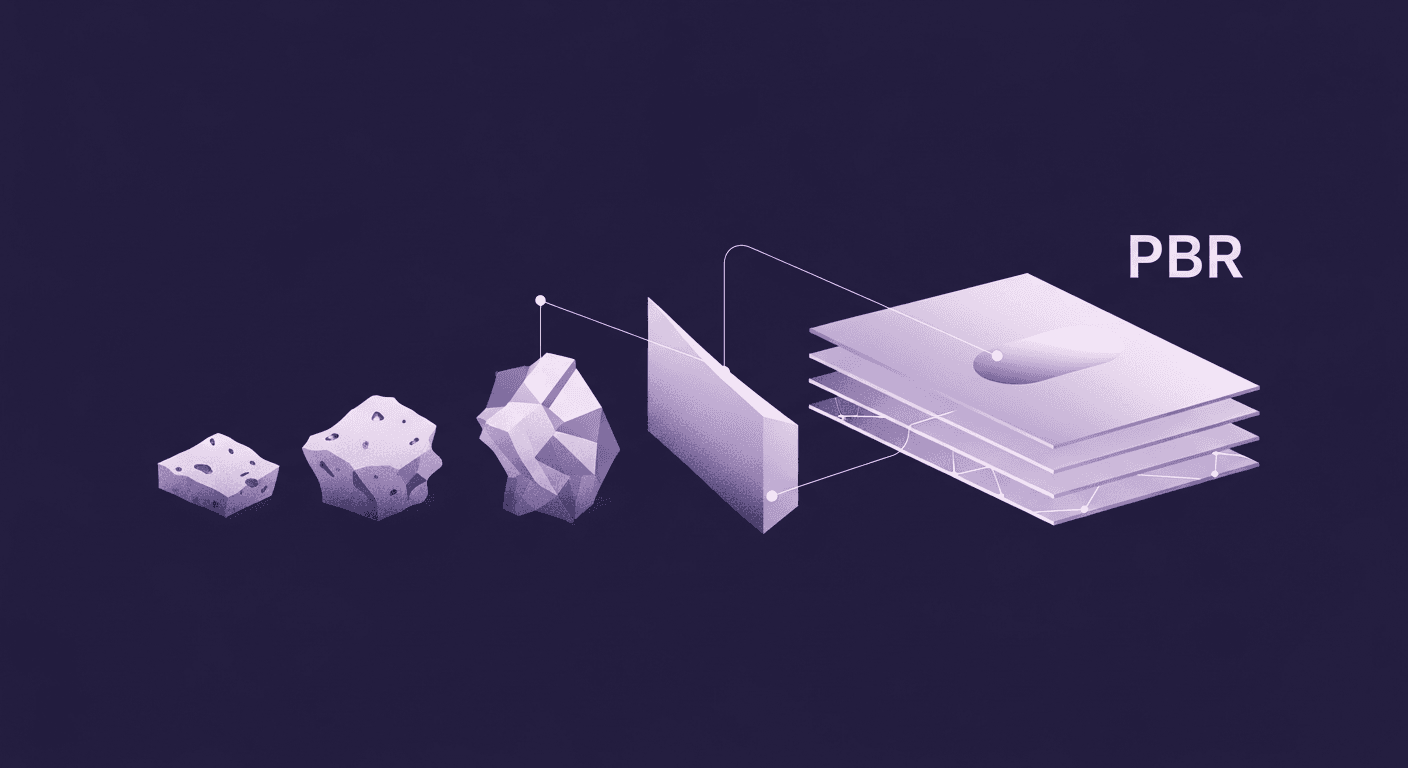

Why PBR textures are the industry standard

Most teams think of PBR as a set of rules for making assets look realistic. They’re right, just not in the way they think. Physically Based Rendering isn’t just an aesthetic upgrade; it’s a foundational shift in how we handle light and surfaces. It’s the reason an asset can move from a sunny outdoor scene to a dim, fluorescent-lit garage and still look correct without a team of artists manually tweaking it for every scenario. Predictability is the real game-changer here.

Unpacking the core principles: Beyond the buzzwords

At its heart, PBR works by simulating real-world material properties based on physics. Instead of artists faking reflections in a specular map, we now define how rough or metallic a surface is and let the engine calculate the lighting. This approach is grounded in three main concepts:

- Energy conservation: A surface can’t reflect more light than it receives. This simple rule prevents materials from looking unnaturally bright and grounds them in physical reality. When light hits a surface, it’s either reflected (specular) or refracted and absorbed/scattered (diffuse). The balance between these two is what PBR engines manage automatically.

- Fresnel effect: The angle at which you view a surface affects its reflectivity. Think of a wooden floor; looking straight down, you see the wood’s color and grain. But from a sharp angle, it reflects the environment almost like a mirror. PBR shaders handle this inherently, adding a subtle but crucial layer of realism.

- Microfacet theory: Every surface, no matter how smooth it appears, is bumpy at a microscopic level. PBR simulates these tiny microfacets. A rough surface has chaotically angled microfacets that scatter light, creating a matte look. A smooth surface has aligned microfacets that reflect light uniformly, creating sharp highlights.
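The three concepts above map onto a few small formulas found in many real-time shaders. Here is a minimal Python sketch, assuming the widely used Schlick approximation for Fresnel, the GGX (Trowbridge-Reitz) microfacet distribution with the common alpha = roughness² remap, and a simple (1 - F) * (1 - metallic) diffuse weight for energy conservation. Individual engines differ in the details, so treat this as an illustration rather than any engine's actual shader.

```python
import math

def fresnel_schlick(cos_theta: float, f0: float) -> float:
    """Schlick's approximation: reflectance rises toward 1.0 at grazing angles."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5

def ggx_ndf(n_dot_h: float, roughness: float) -> float:
    """GGX/Trowbridge-Reitz microfacet distribution, using alpha = roughness^2."""
    alpha = roughness * roughness
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)

def diffuse_weight(fresnel: float, metallic: float) -> float:
    """Energy conservation: light reflected specularly is not available for diffuse.
    Metals (metallic = 1.0) get no diffuse contribution at all."""
    return (1.0 - fresnel) * (1.0 - metallic)

# Fresnel: a dielectric with F0 = 0.04, viewed head-on vs. at a grazing angle.
head_on = fresnel_schlick(1.0, 0.04)
grazing = fresnel_schlick(0.05, 0.04)
# Microfacets: a smooth surface concentrates its microfacets around the half vector,
# so the distribution's peak is much higher (a tighter, sharper highlight).
smooth_peak = ggx_ndf(1.0, 0.2)
rough_peak = ggx_ndf(1.0, 0.8)

print(f"F head-on={head_on:.3f}, grazing={grazing:.3f}")
print(f"D peak smooth={smooth_peak:.1f}, rough={rough_peak:.2f}")
print(f"diffuse weight head-on={diffuse_weight(head_on, 0.0):.2f}")
```

The wooden-floor example falls out of `fresnel_schlick`: the same 4% base reflectance climbs to well over 70% near a grazing angle, with no texture change at all.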

This physics-first approach leads us to the two dominant PBR texturing workflows you see everywhere: Metalness/Roughness and Specular/Glossiness. They’re two different languages used to describe the same thing. The Metal/Rough workflow, popular in engines like Unreal and Unity, is more intuitive. You use a grayscale map to define which parts of a material are metallic (white) and which are non-metallic or dielectric (black). The Specular/Glossiness workflow, common in renderers like V-Ray, gives you more granular control by using a color map for specular reflections, but it also opens the door for artists to create physically inaccurate materials if they’re not careful. Neither is universally better; the right choice depends on your team’s tools and the level of control you need.
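Because both workflows describe the same thing, you can translate between them. Here is a minimal per-texel sketch of the commonly used Metal/Rough to Spec/Gloss conversion, assuming linear (not sRGB) values in [0, 1] and the conventional 4% reflectance for dielectrics. Real converters also handle color-space conversion and texture I/O, which are left out here.

```python
from typing import Tuple

Color = Tuple[float, float, float]

# Common approximation: dielectrics reflect roughly 4% of light at normal incidence.
DIELECTRIC_F0 = 0.04

def metal_rough_to_spec_gloss(
    base_color: Color, metallic: float, roughness: float
) -> Tuple[Color, Color, float]:
    """Convert one Metal/Rough texel (linear values) to diffuse, specular, glossiness."""
    # Metals have no diffuse; dielectrics keep their base color as diffuse.
    diffuse = tuple(c * (1.0 - metallic) for c in base_color)
    # Specular blends from the dielectric constant toward the metal's base color.
    specular = tuple(DIELECTRIC_F0 + (c - DIELECTRIC_F0) * metallic for c in base_color)
    # Glossiness is simply the inverse of roughness.
    glossiness = 1.0 - roughness
    return diffuse, specular, glossiness

# A dielectric texel (red plastic) and a metal texel (gold-like color).
print(metal_rough_to_spec_gloss((0.8, 0.1, 0.1), 0.0, 0.4))
print(metal_rough_to_spec_gloss((1.0, 0.77, 0.34), 1.0, 0.2))
```

This also shows why Spec/Gloss is easier to get wrong: the conversion only ever produces a gray 0.04 specular for dielectrics, while a hand-painted specular map can hold any color at all.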

Regardless of the workflow, the key texture maps do the heavy lifting:

- Albedo/Base color: This defines the pure color of a surface, stripped of any lighting or shadow information. For metals, it stores their specular reflectance color; for dielectrics, it holds their diffuse color.

- Normal: This map creates the illusion of high-resolution surface detail (like pores, scratches, or bumps) on a low-poly model by manipulating how light reflects off the surface.

- Roughness/Glossiness: The star of the show. This grayscale map controls how light scatters across the surface. In a Roughness map, black is perfectly smooth (a mirror), and white is completely matte (chalk). A Glossiness map is simply the inverse.

- Metallic: Used in the Metal/Roughness workflow, this map tells the shader if a surface is a metal or not. It’s almost always pure black or white, with gray values reserved for transitional areas like dirt on metal.

- Ambient Occlusion (AO): While not strictly a core PBR channel, AO is often included to add contact shadows in crevices where ambient light would be blocked, adding depth and grounding the object in its environment.
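Because AO, roughness, and metallic are all single-channel grayscale maps, they are often channel-packed into one RGB texture to save memory and texture samples. Here is a minimal sketch, using flat lists of 8-bit pixel values as a stand-in for real image I/O and assuming the common "ORM" layout (R = occlusion, G = roughness, B = metallic). That layout lines up with glTF 2.0, which reads occlusion from the R channel, roughness from G, and metalness from B.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]

def pack_orm(ao: List[int], roughness: List[int], metallic: List[int]) -> List[Pixel]:
    """Pack three same-sized 8-bit grayscale maps into one RGB image (R=AO, G=rough, B=metal)."""
    if not (len(ao) == len(roughness) == len(metallic)):
        raise ValueError("all maps must have the same number of pixels")
    return list(zip(ao, roughness, metallic))

def unpack_orm(packed: List[Pixel]) -> Tuple[List[int], List[int], List[int]]:
    """Split a packed ORM image back into its three grayscale channels."""
    if not packed:
        return [], [], []
    ao, roughness, metallic = (list(channel) for channel in zip(*packed))
    return ao, roughness, metallic

packed = pack_orm([255, 128], [64, 200], [0, 255])
print(packed)
```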

Understanding these maps isn't just about knowing their definitions. It's about seeing them as a system for describing any material in the known universe, which is precisely why PBR became the unbreakable standard for modern 3D rendering technology.

The grind: Common challenges in modern PBR workflows

Adopting PBR was supposed to simplify things. And in many ways, it did. But as pipelines have grown and teams have scaled, a new set of stubborn challenges has emerged. Managing a consistent visual standard across hundreds of assets and a dozen artists is where the real work begins.

The consistency conundrum: Keeping your art pipeline aligned

Here’s a scenario we’ve all seen: a beautifully authored asset looks perfect in Substance Painter, but when it’s dropped into the game engine, it looks washed out, overly glossy, or just… wrong. This is the consistency conundrum in a nutshell. The problem often lies in the subtle differences between how various tools and renderers interpret PBR values. A roughness value of 0.5 in one program might not look identical in another, for example because the tools remap roughness differently in their shading models, or because a map was imported in the wrong color space.
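Color space is one of the most common concrete causes of this drift. Roughness, metallic, and AO maps are data, not color, and should be sampled as linear. If a roughness map is flagged as sRGB on import, the engine decodes it with the sRGB curve and the surface turns noticeably glossier. Here is a minimal sketch of that effect, using the standard sRGB transfer functions:

```python
def srgb_to_linear(value: float) -> float:
    """Standard sRGB decode for a normalized value in [0, 1]."""
    if value <= 0.04045:
        return value / 12.92
    return ((value + 0.055) / 1.055) ** 2.4

def linear_to_srgb(value: float) -> float:
    """Standard sRGB encode, the inverse of srgb_to_linear."""
    if value <= 0.0031308:
        return value * 12.92
    return 1.055 * value ** (1.0 / 2.4) - 0.055

authored = 0.5                      # roughness as painted; data maps should stay linear
misread = srgb_to_linear(authored)  # what the shader sees if the texture is flagged sRGB
print(f"authored roughness {authored:.2f} -> shader sees {misread:.3f}")
# → authored roughness 0.50 -> shader sees 0.214
```

A mid-rough surface ending up near 0.21 is exactly the "overly glossy" symptom described above, which is why a per-map color-space check belongs in any asset import checklist.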

This leads to a few key pain points for leads:

- Managing texture libraries and standards: When every artist has their own interpretation of what “moderately rough” means, your material library quickly becomes a mess. Establishing a clear set of standards, like a calibrated lighting scene for look development and a master material library with approved base materials, is non-negotiable. Without it, you spend more time fixing assets than creating them.

- The challenge of environment lighting: PBR materials are chameleons; they look completely different depending on the light they’re in. An asset authored in a neutral lighting studio might fail spectacularly in a moody, color-rich environment. This is why it’s critical to test assets under multiple lighting conditions early and often. Your standard look-dev scene should include a range of HDRI maps that represent the key lighting scenarios in your project (day, night, interior, exterior).
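Parts of such a standard can be checked automatically before an asset ever reaches the look-dev scene. Here is a minimal sketch, assuming flat per-texel lists of 8-bit sRGB albedo values (grayscale, for simplicity) and 0.0-1.0 metallic values. The thresholds are hypothetical placeholders loosely based on commonly cited guidance (dielectric albedo roughly within 30-240 sRGB, metallic mostly pure black or white); replace them with your own studio's numbers.

```python
from typing import List

# Hypothetical studio thresholds; tune these to your own PBR guidelines.
DIELECTRIC_ALBEDO_MIN = 30         # 8-bit sRGB; darker dielectric albedo is suspicious
DIELECTRIC_ALBEDO_MAX = 240        # 8-bit sRGB; brighter dielectric albedo is suspicious
METALLIC_GRAY_BAND = (0.1, 0.9)    # metallic values in this band should be rare transitions
MAX_GRAY_METALLIC_FRACTION = 0.05  # at most 5% of texels may fall in the gray band

def validate_material(albedo: List[int], metallic: List[float]) -> List[str]:
    """Return human-readable warnings for one material's albedo and metallic texels."""
    if len(albedo) != len(metallic):
        raise ValueError("albedo and metallic maps must be the same size")
    warnings: List[str] = []

    # Albedo range only applies to dielectric texels; metals store reflectance instead.
    dielectric = [a for a, m in zip(albedo, metallic) if m < 0.5]
    out_of_range = [
        a for a in dielectric
        if not DIELECTRIC_ALBEDO_MIN <= a <= DIELECTRIC_ALBEDO_MAX
    ]
    if out_of_range:
        warnings.append(
            f"{len(out_of_range)} dielectric albedo texel(s) outside "
            f"{DIELECTRIC_ALBEDO_MIN}-{DIELECTRIC_ALBEDO_MAX} sRGB"
        )

    # Metallic should be almost entirely 0 or 1.
    low, high = METALLIC_GRAY_BAND
    gray = sum(1 for m in metallic if low < m < high)
    if metallic and gray / len(metallic) > MAX_GRAY_METALLIC_FRACTION:
        warnings.append(
            f"{gray / len(metallic):.0%} of metallic texels are gray; expected mostly 0 or 1"
        )
    return warnings

print(validate_material([128, 128, 10], [0.0, 0.0, 0.0]))
```

Run as a pre-commit or import hook, a check like this catches the "every artist has their own interpretation" problem before it reaches the master library.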

This answers a question that comes up constantly: do PBR textures still improve 3D rendering realism when standards vary? Not on their own. Realism comes only from strict adherence to a consistent, well-defined standard. PBR provides the framework, but the pipeline provides the discipline. When standards vary, you lose PBR's primary benefit, predictability, and artists fall back into the old habit of tweaking materials to look good in just one specific context.

Technical hurdles in creating advanced PBR textures

Beyond consistency, there are fundamental technical limits we’re always pushing against. Authoring simple materials like plastic or plain steel is straightforward, but the world is filled with complex surfaces that challenge our current Physically Based Rendering techniques.

Here’s where artists hit a wall:

- Crafting complex materials: Think about anisotropic surfaces like brushed aluminum, where highlights stretch in a specific direction. Or layered materials like a clear coat on a car, a layer of dust on varnished wood, or iridescent fabrics that change color based on viewing angle. These require more than the standard set of PBR maps. They often demand custom shaders, extra texture maps (like anisotropy direction or clear coat thickness), and a much deeper technical understanding, which creates a skills gap in many teams.

- Balancing high-fidelity textures with performance budgets: We all want 4K textures on every asset. But performance is a zero-sum game, especially in real-time applications. The constant battle is deciding where to spend your texture budget. Do you use a unique 2K map for a hero prop or a 512px tileable texture with some clever vertex painting for a large wall? This balance of art and optimization is one of the biggest challenges in creating advanced PBR textures.

- The unending battle with UVs and texel density: No one wants to wrestle with UVs at 2 a.m. Yet, they remain one of the most significant technical hurdles. Ensuring consistent texel density across a scene is critical for making sure textures hold up at different distances. A character’s face can’t have ten times the texture resolution of their hands. Tools like UDIMs have helped, but the underlying challenge of efficiently packing and unwrapping complex meshes remains a time-consuming and often frustrating part of the workflow.
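Both the budget question and the texel-density question come down to arithmetic you can script. Here is a minimal sketch, assuming square textures, a full mip chain (which adds roughly one third to the base size), and BC7's 1 byte per pixel as an example compressed format. Texel density is computed in pixels per meter from a mesh's UV coverage and surface area; the function names are illustrative, not from any particular tool.

```python
import math

def texel_density(texture_px: int, uv_area: float, world_area_m2: float) -> float:
    """Pixels per meter on a mesh.

    uv_area is the area the mesh covers in 0-1 UV space (it can exceed 1.0 for
    tiling textures); world_area_m2 is the mesh's surface area in square meters.
    """
    return texture_px * math.sqrt(uv_area / world_area_m2)

def texture_memory_mib(size_px: int, bytes_per_px: float, mipmaps: bool = True) -> float:
    """Approximate GPU memory for a square texture; a full mip chain adds about 1/3."""
    base = size_px * size_px * bytes_per_px
    return base * (4.0 / 3.0 if mipmaps else 1.0) / (1024 * 1024)

# Option A: unique 2K map on a 1 m^2 hero prop whose UVs fill 70% of the texture.
print(f"hero prop: {texel_density(2048, 0.7, 1.0):.0f} px/m, "
      f"{texture_memory_mib(2048, 1.0):.2f} MiB per BC7 map")
# Option B: 512px tileable texture repeated once per meter across a 4 m x 4 m wall
# (the UVs span 4 x 4 tiles, so uv_area = 16).
print(f"wall: {texel_density(512, 16.0, 16.0):.0f} px/m, "
      f"{texture_memory_mib(512, 1.0):.2f} MiB per BC7 map")
```

Comparing numbers like these across a scene makes texel-density mismatches (a face at several times the density of the hands) and budget overruns visible before anyone opens the engine.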

These hurdles are a daily reality. The next frontier isn't just about creating more realistic materials; it's about making the process of creating and managing them smarter and more scalable.

The horizon: Future trends in physically based rendering

If the last decade was about standardizing PBR, the next is about making it smarter, faster, and more integrated. The line between real-time and offline rendering is blurring, and the manual grunt work that defines much of material authoring is on the verge of a major overhaul. The future trends in physically based rendering are less about new maps and more about entirely new ways of working.

Emerging technologies in texture rendering

The most exciting shifts are happening at the intersection of AI, proceduralism, and rendering hardware. These aren't far-off dreams; they're emerging technologies in texture rendering that are already reshaping pipelines.

- AI and machine learning: This is more than just a buzzword. AI is becoming a powerful assistant for texture artists. Think of tools that can generate a full set of PBR maps from a single photograph, removing highlights and shadows automatically. Or AI-powered upscaling, both for turning low-resolution source textures into sharper high-resolution ones and, at runtime, techniques like NVIDIA's DLSS that render frames at a lower internal resolution and reconstruct them at crisp, high fidelity. We’re also seeing generative models that can create entire libraries of stylistically consistent, tileable materials from simple text prompts. The goal here isn’t to replace artists, but to automate the 80% of texturing work that is repetitive and time-consuming.

- The rise of proceduralism: Tools like Substance Designer and Houdini have championed a procedural-first mindset for years, but it's finally becoming the industry norm. Instead of painting a static texture, you build a system of nodes that generates the texture. This is a monumental shift. Need a thousand unique wood plank variations? You don't make them by hand; you just change the random seed in your graph. This approach gives you resolution independence, non-destructive editing, and unparalleled control. It turns material creation from a painting task into a design task.

- Real-time ray tracing: This is the big one. For years, real-time engines have used clever hacks (like screen-space reflections and baked lightmaps) to simulate complex lighting. Real-time ray tracing changes the game by simulating the actual path of light bounces. Its impact on material authoring is profound. Subtle effects that artists used to fake in their textures, like soft reflections on a semi-rough surface or color bleeding from a nearby object, now emerge naturally from the light simulation. This means artists can focus more on the underlying physical properties of a material and less on faking the way it interacts with light.
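The random-seed idea from the proceduralism point is easy to show outside any particular tool. In this minimal sketch, a hypothetical `plank_variation` function stands in for a node graph: every parameter is derived from a seeded random generator, so each seed reproduces exactly the same plank, and a thousand variations are just a thousand seeds. The parameter names and ranges are illustrative assumptions, not actual Substance Designer or Houdini settings.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class PlankParams:
    hue_shift: float    # small tint variation, in degrees
    grain_scale: float  # relative frequency of the grain pattern
    knot_count: int     # number of knots on the plank
    wear: float         # 0.0 = pristine, 1.0 = heavily worn

def plank_variation(seed: int) -> PlankParams:
    """Hypothetical 'graph': derive one plank's parameters deterministically from a seed."""
    rng = random.Random(seed)  # private generator: same seed, same plank, every time
    return PlankParams(
        hue_shift=rng.uniform(-8.0, 8.0),
        grain_scale=rng.uniform(0.8, 1.25),
        knot_count=rng.randint(0, 3),
        wear=rng.betavariate(2.0, 5.0),  # skewed toward lightly worn
    )

# A thousand unique planks, none of them made by hand.
planks = [plank_variation(seed) for seed in range(1000)]
print(planks[0])
```

Storing the seed instead of the pixels is what makes the approach non-destructive: change the ranges in the "graph" and every plank regenerates consistently.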

Evolving computer graphics workflows and tools

As the technology evolves, so do the computer graphics workflows that bind them together. The focus is shifting from tool-specific pipelines to more universal, data-driven systems.

- The shift toward universal material standards: One of the biggest bottlenecks in any production is moving assets between different software. A material built in Unreal doesn't just work in Blender out of the box. This is the problem that standards like MaterialX, developed by Industrial Light & Magic, are built to solve. MaterialX provides a common, open-source way to describe complete materials (textures, nodes, and all) that any compliant renderer or game engine can read. This is the key to a truly portable asset pipeline, where you create a material once and deploy it everywhere.

- How VR/AR is pushing realistic texture mapping: Virtual and augmented reality applications have an insatiable appetite for realism and performance. Because the user is so immersed, any imperfection in a material is instantly noticeable. This is pushing the boundaries of realistic texture mapping. There’s a huge demand for textures that hold up under extreme close-up inspection and rendering engines that can deliver high-fidelity materials at 90+ frames per second. This pressure is accelerating the adoption of techniques like dynamic texture streaming and foveated rendering.

- The integration of scan-based data: Photogrammetry and material scanning are no longer niche techniques. Capturing real-world objects and surfaces provides an unparalleled foundation of realism. The challenge is no longer in the capture, but in the integration. Raw scan data is often messy, unoptimized, and difficult to work with. The evolving workflows are focused on tools that can automatically clean, retopologize, and generate PBR-ready textures from this data, turning a noisy point cloud into a production-ready asset with minimal manual intervention.
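To make "portable" concrete: a MaterialX document is plain XML describing nodes and how they connect. The sketch below writes a minimal steel material using Python's standard-library `xml.etree.ElementTree`, assuming MaterialX 1.38 and its `standard_surface` shading model; the names and values are illustrative. In production you would more likely use the official MaterialX Python bindings and validate the document against the specification.

```python
import xml.etree.ElementTree as ET

def steel_materialx(base_color=(0.56, 0.57, 0.58), roughness=0.35) -> str:
    """Build a minimal MaterialX document: one standard_surface shader plus its material."""
    root = ET.Element("materialx", version="1.38")

    # The shader node holds the physical parameters.
    shader = ET.SubElement(root, "standard_surface", name="SR_steel", type="surfaceshader")
    ET.SubElement(shader, "input", name="base_color", type="color3",
                  value=", ".join(f"{c:g}" for c in base_color))
    ET.SubElement(shader, "input", name="metalness", type="float", value="1.0")
    ET.SubElement(shader, "input", name="specular_roughness", type="float",
                  value=f"{roughness:g}")

    # The material node references the shader by name; renderers bind to this.
    material = ET.SubElement(root, "surfacematerial", name="M_steel", type="material")
    ET.SubElement(material, "input", name="surfaceshader", type="surfaceshader",
                  nodename="SR_steel")

    ET.indent(root)  # pretty-print (Python 3.9+)
    return ET.tostring(root, encoding="unicode")

print(steel_materialx())
```

Because the description lives in the document rather than in one application's scene file, any renderer that supports MaterialX can reconstruct the same material.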

Future-proofing your pipeline: Integrating advanced rendering methods

Keeping up with this pace of change feels like a full-time job. The real challenge isn’t just adopting a new tool; it’s evolving your entire pipeline and team mindset without grinding production to a halt. You need a strategy that’s flexible, scalable, and built for the long haul. It's time to move from a reactive stance, bolting on new tech as it comes, to a proactive one.

Strategies for a streamlined and efficient art pipeline

Building a future-proof pipeline is about creating a stable core that can accommodate new advanced rendering methods as they mature. It’s less about picking the perfect software today and more about building a flexible system for tomorrow.

Here’s a practical framework:

- Build a modular material library: Don’t think in terms of individual assets; think in terms of base materials. Create a core library of highly controlled, procedural base materials (like steel, concrete, plastic, wood) in a tool like Substance Designer. Artists can then pull from this library to texture specific assets, layering details like dirt, wear, and scratches on top. This ensures consistency and makes updating assets a breeze. If you need to change the base steel properties, you change it once in the master graph, and it propagates to every asset using it.

- Prioritize documentation and training: A tool or a standard is only as good as its documentation. Your PBR guidelines shouldn't be a dusty document on a shared drive. They should be a living resource with visual examples, technical specs, and clear best practices. When you introduce a new technique, hold workshops. Create tutorials. The goal is to make sure every artist, from senior to junior, is speaking the same visual and technical language.

- Evaluate and integrate new 3D rendering technology carefully: Chasing every new tool is a recipe for a fragmented pipeline. Set up a dedicated design sandbox, separate from your main production environment. When a new technology like MaterialX or an AI texturing tool emerges, test it there. Give a small team a specific, low-risk project to vet the tech. Ask the hard questions: How does this fit into our current workflow? What’s the training overhead? Does it solve a problem we actually have? Only integrate it into the main pipeline once it’s proven its value and you have a clear implementation plan.
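The "change it once, propagate everywhere" property of a modular library comes from assets referencing base materials instead of copying them. Here is a minimal sketch of that idea in plain Python, with hypothetical class names and a made-up dirt-to-roughness rule standing in for a real layering graph:

```python
from dataclasses import dataclass, field, replace
from typing import Dict, Tuple

@dataclass(frozen=True)
class BaseMaterial:
    name: str
    base_color: Tuple[float, float, float]
    metallic: float
    roughness: float

@dataclass
class AssetMaterial:
    """An asset-level material: a reference to a base plus local detail layers."""
    base: str                                               # library key, not a copy
    layers: Dict[str, float] = field(default_factory=dict)  # e.g. {"dirt": 0.3}

class MaterialLibrary:
    """Hypothetical master library keyed by base-material name."""

    def __init__(self) -> None:
        self._bases: Dict[str, BaseMaterial] = {}

    def publish(self, material: BaseMaterial) -> None:
        """Add or update a base material; every asset referencing it picks up the change."""
        self._bases[material.name] = material

    def resolve(self, asset: AssetMaterial) -> BaseMaterial:
        """Combine the current base with the asset's layers."""
        base = self._bases[asset.base]
        # Hypothetical layering rule: dirt roughens the surface, capped at 1.0.
        dirt = asset.layers.get("dirt", 0.0)
        return replace(base, roughness=min(1.0, base.roughness + 0.5 * dirt))

library = MaterialLibrary()
library.publish(BaseMaterial("steel", (0.56, 0.57, 0.58), 1.0, 0.35))
railing = AssetMaterial("steel", {"dirt": 0.2})
print(library.resolve(railing).roughness)

# Update the master steel once; the railing resolves to the new values automatically.
library.publish(BaseMaterial("steel", (0.56, 0.57, 0.58), 1.0, 0.30))
print(library.resolve(railing).roughness)
```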

Shifting from reactive to proactive: preparing for what’s next

Ultimately, future-proofing is a cultural challenge, not just a technical one. It’s about building a team that is curious, adaptable, and always looking ahead. The teams that thrive will be the ones that treat research and development as a core competency, not an afterthought.

- Foster a culture of R&D: Encourage your artists to spend a small percentage of their time, even just a few hours a week, experimenting with new tools and techniques. Host internal talks where team members can share what they’ve learned. This creates a bottom-up flow of innovation and keeps the team’s skills sharp. It turns R&D from a top-down mandate into a shared, collaborative effort.

- Identify critical skills for the future: The role of the technical artist is evolving faster than ever. The skills that will be most valuable in the next five years are not just about artistic talent. They are about systems thinking. Look for and cultivate these skills in your team:

  - Procedural generation: Deep proficiency in node-based tools like Substance Designer and Houdini is becoming essential.

  - Shader authoring: Understanding the logic of shaders, whether in a node graph like Unreal’s Material Editor or in code (HLSL/GLSL), is a massive advantage.

  - Scripting and automation: Knowledge of Python to write scripts that automate repetitive tasks (like batch processing textures or managing files) is a huge force multiplier for any pipeline.

  - Data management: As assets become more complex, understanding how to manage and version data effectively is no longer just a producer’s job.
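As an example of the kind of small automation script that pays for itself, here is a minimal sketch that flags texture files breaking a naming convention before they enter the pipeline. The `T_<Asset>_<Map>` pattern, the allowed map names, and the file extensions are assumptions; swap in your studio's own rules.

```python
import re
from pathlib import Path
from typing import Iterable, List

# Hypothetical convention: T_<Asset>_<Map>.<ext>, e.g. T_Crate_BaseColor.png
NAME_PATTERN = re.compile(
    r"^T_(?P<asset>[A-Za-z0-9]+)_"
    r"(?P<map>BaseColor|Normal|Roughness|Metallic|AO|ORM)"
    r"\.(png|tga|exr)$"
)

def find_misnamed(paths: Iterable[Path]) -> List[Path]:
    """Return the texture files whose names break the convention."""
    return [p for p in paths if not NAME_PATTERN.match(p.name)]

def scan_folder(folder: Path) -> List[Path]:
    """Check every file in a folder tree, recursively."""
    return find_misnamed(p for p in folder.rglob("*") if p.is_file())

if __name__ == "__main__":
    for bad in scan_folder(Path(".")):
        print(f"misnamed: {bad}")
```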

By investing in these strategies and skills, you’re not just preparing for the next big rendering technique. You’re building a resilient, adaptable art pipeline that can handle whatever comes next.

From painter to architect: The real job is the pipeline

We’ve covered a lot of ground from the daily grind of managing texture consistency to the not-so-distant future of AI-driven material creation. But the biggest takeaway isn’t a list of new tools to watch. It’s that the job itself is shifting.

For the last decade, success was about mastering the craft of PBR textures. For the next, it’s about mastering the systems that produce them. The constant push for more realism, the need for consistency across massive teams, and the rise of proceduralism all point to the same conclusion: your most valuable product isn't a single, perfect asset. It's the resilient, modular pipeline that can generate thousands of them consistently.

This isn't about technology replacing artists. It's about automating the repetitive 80% of the work so your team can focus on the creative 20% that truly matters. A well-designed workflow, one built for flexibility, not just fidelity, is what gives you that creative freedom back. The tools will keep evolving, but that principle is here to stay. Your pipeline is the platform for your art. Go build it.

Mira Kapoor

Mira leads marketing at Texturly, combining creative intuition with data-savvy strategy. With a background in design and a decade of experience shaping stories for creative tech brands, Mira brings the perfect blend of strategy and soul to every campaign. She believes great marketing isn’t about selling; it’s about sparking curiosity and building community.

Latest Blogs

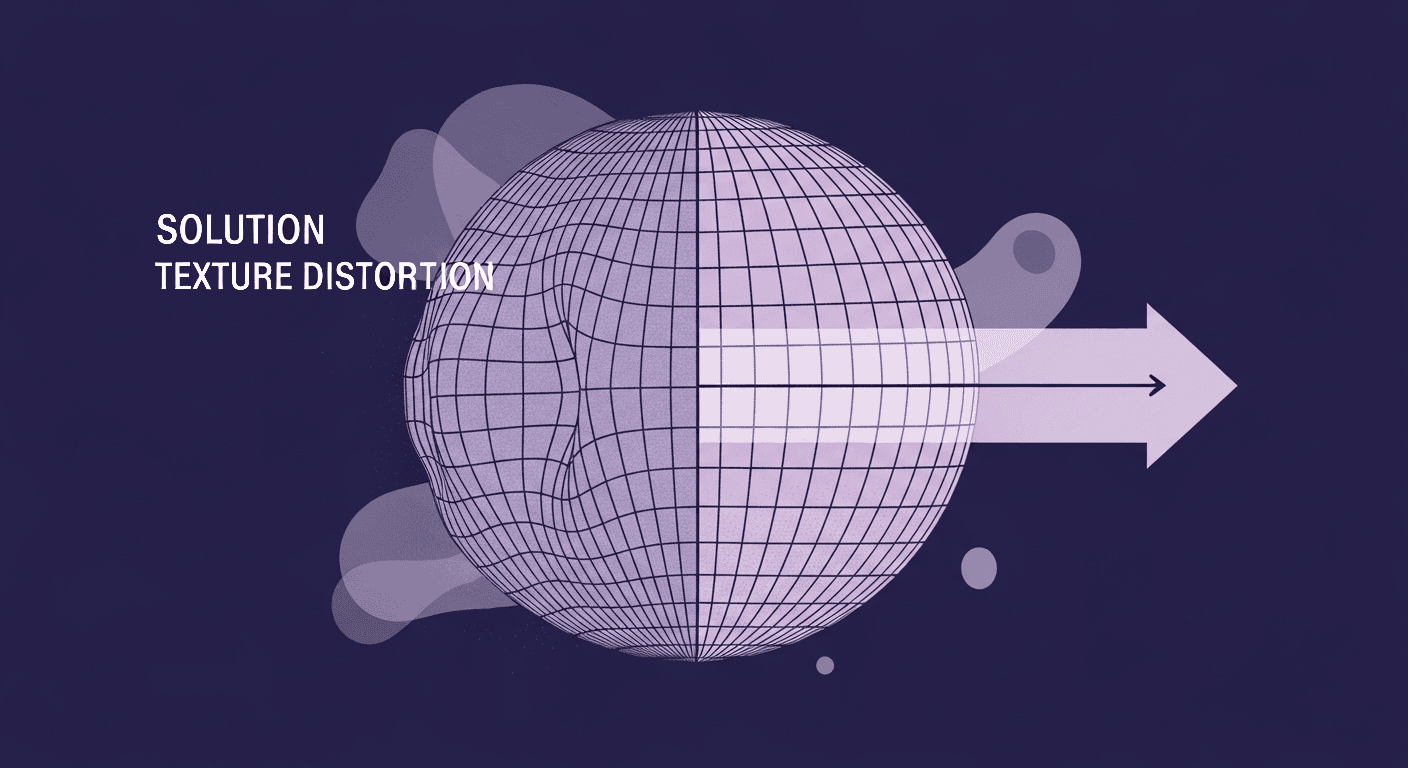

The Polar Pinch Problem: How VR Artists Solve Texture Distortion

Texture creation

PBR mapping

Max Calder

Dec 24, 2025

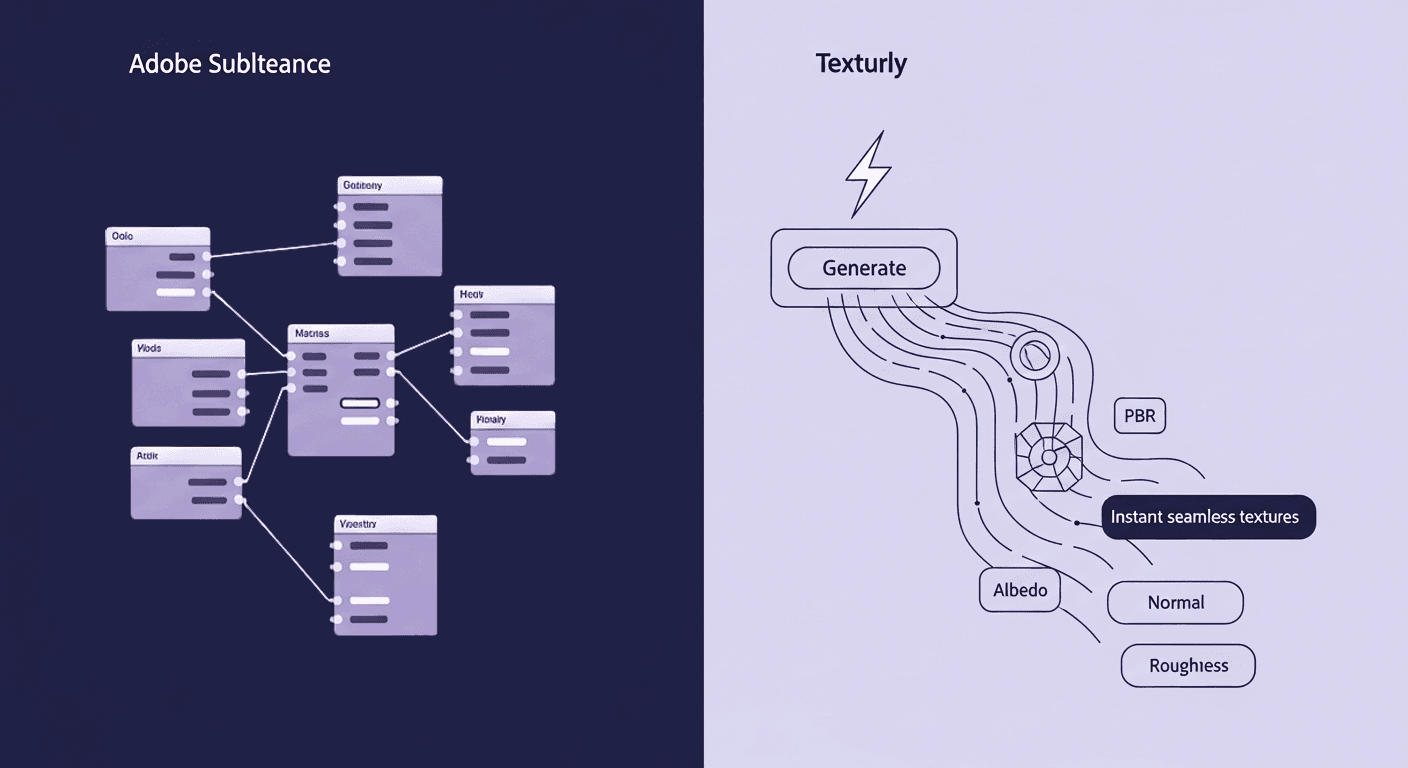

When to Ditch Nodes for AI: A Texturly vs. Adobe Substance Workfl...

AI in 3D design

Texture creation

Mira Kapoor

Dec 22, 2025

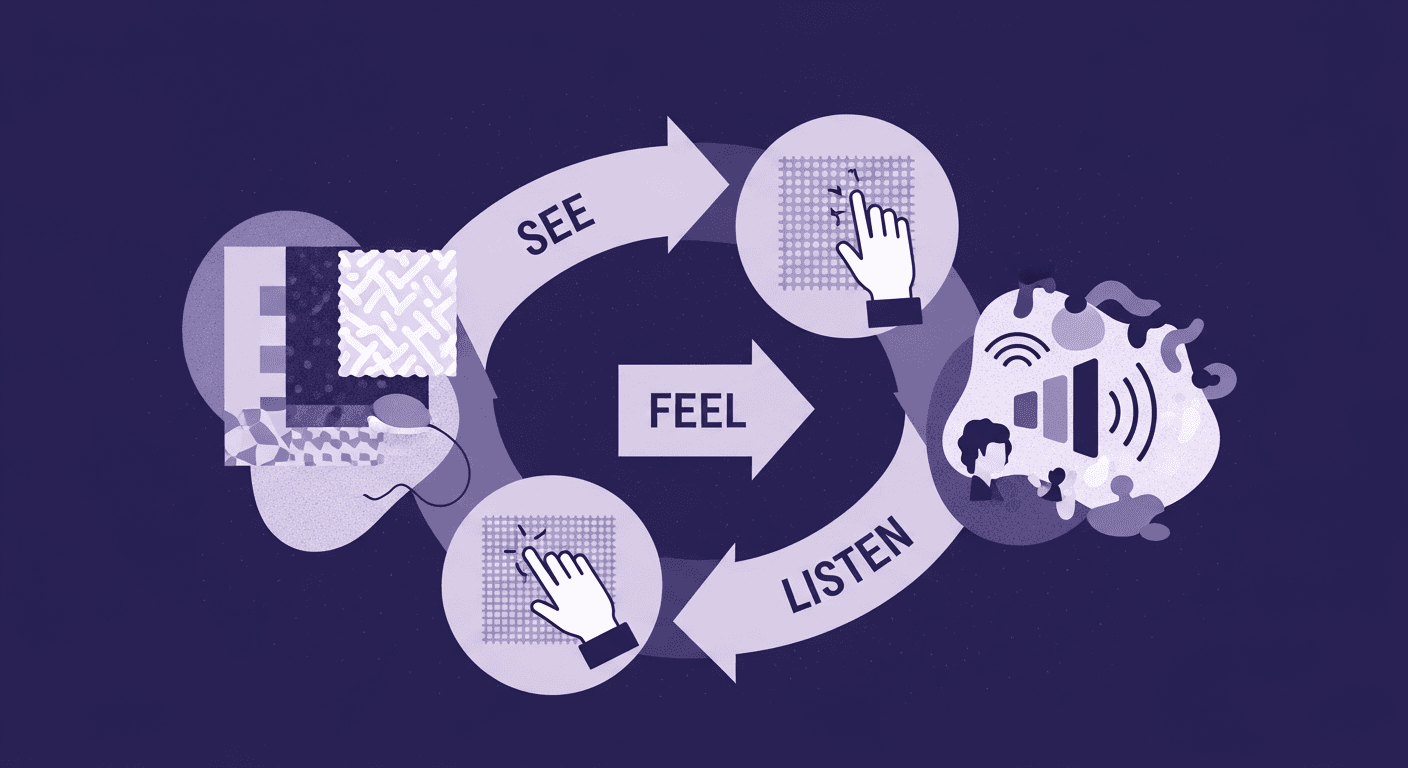

The See-Feel-Listen Method That Decodes Any Fabric Texture

Fabric textures

Texture creation

Max Calder

Dec 19, 2025