Solving AI Texture Generation: Breaking Down Challenges & Innovations

By Max Calder | 4 July 2025 | 7 mins read

You’ve felt that flicker of excitement and then the familiar wave of frustration. You ask an AI for a “weathered stone wall,” and it gives you something that looks technically correct but feels completely soulless, like a stock photo with no story. So, what gives?

For artists and designers, the limitations of AI texture generation are a familiar story by now: the "almost right, but not quite" quality problem, AI's struggle with artistic context, and the constant battle for creative control. You've run into the data dilemmas under the hood, the material properties gap, and the "one-size-fits-all" model that simply doesn't get your specific vibe.

But here's the crucial shift: we're moving beyond just identifying these hurdles. This post cuts through the hype to get to the heart of the matter, exploring the genuine breakthroughs that are finally making these tools reliable creative partners, and how you can actively solve for their current constraints in your own pipeline. Because these tools shouldn't just be a novelty; they should be a powerful assistant that understands your vision and fits into your workflow.

The breakthroughs: How we're improving AI texture generation accuracy

It’s easy to focus on the frustrations, but the good news is that these problems are being actively solved. The technology is moving incredibly fast, and the breakthroughs we’re seeing are directly addressing the core challenges of quality, control, and material properties. This is where we shift from complaining about the problems to getting excited about the solutions.

Smarter models built on better data

The garbage-in, garbage-out problem is being tackled head-on. The industry is realizing that the secret to better AI isn't just more data; it's better data. This means a shift from scraping the web to building curated, high-quality datasets specifically for machine learning texture generation.

These datasets are composed of high-resolution, professionally shot photos with consistent lighting. More importantly, they often include full PBR material scans, not just color information. When a model is trained on this kind of data, it learns the language of materials, not just images. It learns the relationship between a rust pattern and its corresponding roughness value. This leads to far more realistic, reliable, and physically accurate results right out of the box.

Giving you the steering wheel: Innovations in user control

The days of being at the mercy of a single text prompt are ending. New techniques are emerging that give the artist back the control they need for a professional workflow. This is about moving from a vending machine model to a true co-creation tool.

Tools like ControlNets, for example, let you guide the AI's composition using inputs like a depth map, a normal map, or even a simple sketch. You can literally draw the outlines of where you want cracks in a stone wall to appear, and the AI will fill in the details. Image-to-image generation lets you provide a rough painting or a reference photo as a starting point, giving the AI a much stronger sense of your intended art direction.

Think of it as the ultimate creative assistant. You provide the vision and the structure; the AI handles the laborious rendering of details. This hybrid approach is improving AI texture generation accuracy because it combines human artistic intent with the raw power of the algorithm.

Beyond the single image: Generating full PBR material maps

This is the breakthrough that directly addresses the material properties gap. The most advanced texture-focused AI platforms no longer generate just a single color image. They generate a complete set of PBR maps: albedo, roughness, metalness, normal, and ambient occlusion, all at once.

Because these models are trained on full material scans, they learn the physical relationships between the maps. A scratch that exposes bare metal under paint, for example, should show a high metalness value and a low roughness value at the same pixels in the corresponding maps. This addresses one of the biggest technical constraints in AI texture rendering: you get a full, ready-to-use material that responds correctly to light in any modern game engine or renderer.
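To make the idea of a consistent map set concrete, here is a minimal sketch of a sanity check you might run on a generated material before importing it. The names check_material and looks_like_bare_metal, the dict layout, and the threshold values are all assumptions made for illustration; they are not part of any particular tool.

```python
# Hypothetical sanity check for a generated PBR map set.
# Maps are stored as lists of rows; the layout and names are assumptions.
REQUIRED_MAPS = ("albedo", "roughness", "metalness", "normal", "ambient_occlusion")


def map_size(pixels):
    """Return (width, height) of a map stored as a list of rows."""
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    return width, height


def check_material(maps):
    """Return a list of problems found in a dict of map name -> rows of pixels."""
    problems = [f"missing map: {name}" for name in REQUIRED_MAPS if name not in maps]
    sizes = {name: map_size(pixels) for name, pixels in maps.items()}
    if len(set(sizes.values())) > 1:
        problems.append(f"maps differ in resolution: {sizes}")
    return problems


def looks_like_bare_metal(maps, x, y, min_metalness=0.8, max_roughness=0.4):
    """True if the pixel reads as exposed, fairly smooth metal.

    The thresholds are illustrative guesses, not physical constants.
    """
    return (maps["metalness"][y][x] >= min_metalness
            and maps["roughness"][y][x] <= max_roughness)
```

A check like this only catches structural mistakes (a missing map, mismatched resolutions) and lets you spot-check a pixel where you expect a scratch. It does not judge whether the material looks right; that is still your call.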

This is a massive leap forward. It transforms the AI from a simple image-maker into a true material creator, saving hours of manual work and finally making AI-generated textures a viable option for professional 3D pipelines.

Your workflow, upgraded: Making AI work for you today

Breakthroughs are exciting, but you have deadlines to meet now. So, how can you use today’s imperfect AI tools to your advantage without letting them derail your workflow? The key is a mindset shift: stop trying to make the AI do the entire job. Instead, use it as a powerful assistant for specific tasks.

Here’s how to make it work in a real production pipeline.

Use AI for ideation, not just final renders

The biggest strength of AI right now is its speed. It can generate variations on an idea faster than any human. Don't ask it for a final, pixel-perfect texture. Instead, use it for mood boarding and creative exploration at the beginning of a task.

Let’s say you need to texture a sci-fi crate. Instead of spending an hour meticulously painting one detailed concept, spend five minutes with an AI generator. Prompt it for “worn metallic panel with alien glyphs”, “scratched industrial plastic casing”, and “bio-mechanical armor plating”. You’ll get ten rough ideas in a fraction of the time. Most will be unusable, but one or two might have a unique pattern or an interesting color combination that sparks your own creativity. It’s a tool for brainstorming, not for replacing the final, handcrafted work.

Layering and post-processing are your best friends

Never take an AI-generated texture at face value. Treat it as a raw ingredient, not the finished meal. The most effective workflow is to use AI output as a base layer in the tools you already know and love, like Photoshop, Mari, or Substance Painter.

Generate a texture that has some interesting noise or a complex pattern you like. Then, bring that image into your painting software. Layer it with hand-painted details, procedural noises, and custom grunge maps. Blend it, mask it, and paint over it. This hybrid approach gives you the best of both worlds: the speed and novelty of AI generation, combined with the artistic control and precision of your own hand. You’re not letting the AI dictate the final look; you’re using it to save time on the foundational layers.
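Under the hood, that layering comes down to a couple of simple per-pixel operations. The sketch below shows a multiply blend (the classic way to lay a grunge map over a base) and a masked blend (painting over only where your mask allows). It works on grayscale images stored as rows of 0.0 to 1.0 floats; the function names are hypothetical and your painting software does all of this for you, so treat it as an illustration of the math rather than a production tool.

```python
def lerp(a, b, t):
    """Linear interpolation: returns a when t is 0.0 and b when t is 1.0."""
    return a + (b - a) * t


def multiply_blend(base, layer):
    """Multiply two grayscale images; dark areas of the layer darken the base."""
    return [[b * l for b, l in zip(base_row, layer_row)]
            for base_row, layer_row in zip(base, layer)]


def masked_blend(base, painted, mask):
    """Keep the base where mask is 0.0 and use the painted layer where it is 1.0."""
    return [[lerp(b, p, m) for b, p, m in zip(base_row, painted_row, mask_row)]
            for base_row, painted_row, mask_row in zip(base, painted, mask)]


# Example: AI base -> grunge pass -> hand-painted detail through a mask.
ai_base = [[0.8, 0.8]]
grunge = [[1.0, 0.5]]
hand_painted = [[0.2, 0.2]]
paint_mask = [[0.0, 1.0]]

dirtied = multiply_blend(ai_base, grunge)
final = masked_blend(dirtied, hand_painted, paint_mask)
```

The left pixel keeps the AI base untouched, while the right pixel is first darkened by the grunge map and then fully replaced by the hand-painted value, which mirrors the order of layers you would stack in a painting tool.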

Master the negative prompt to weed out bad results

This is one of the most practical, powerful tips for getting better results from any generator. Most tools have a “negative prompt” field, and it’s your best friend for fighting back against common AI texture generation problems. Use it to tell the AI what you don’t want to see.

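Are you constantly getting blurry, low-quality results? Add "--no blurry, low resolution, jpeg artifacts" to your prompt. Tired of seeing ugly, obvious seams in your tileable textures? Add "--no seams, tiling errors, obvious repeat". Note that "--no" is the inline syntax some generators use; if your tool has a separate negative prompt field, enter the same terms there without the flag. Either way, a good negative prompt acts as a quality filter, steering the AI away from its worst habits.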

Start a text file where you keep your go-to negative prompts. A typical one for texture work might look something like this:

--no blurry, soft focus, ugly, tiling errors, seams, repetitive, symmetrical, smooth, plastic, watermark, text

By being explicit about what to avoid, you dramatically increase the chances of getting a usable result that you can then refine in your main workflow. It's a simple step, but it puts a surprising amount of control back in your hands.
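If you keep that list in a text file, a few lines of scripting can turn it into a ready-to-paste prompt. This is a minimal sketch: the file layout (one term or a comma-separated group per line, with "#" for comments) and the function names are assumptions, and the "--no" suffix simply follows the syntax used above. For a tool with a separate negative prompt field, you would pass the joined terms on their own instead.

```python
def load_negative_terms(text):
    """Parse saved terms: comma-separated or one per line; '#' lines are comments."""
    terms = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        for term in line.split(","):
            term = term.strip()
            # Skip empty fragments and duplicates, keeping the first-seen order.
            if term and term not in terms:
                terms.append(term)
    return terms


def with_negative_prompt(prompt, terms):
    """Append the terms to a prompt using the inline '--no' syntax."""
    if not terms:
        return prompt
    return f"{prompt} --no {', '.join(terms)}"


# Contents of a hypothetical negative_prompts.txt
saved = """# quality
blurry, soft focus
# tiling
seams
blurry
"""

terms = load_negative_terms(saved)
full_prompt = with_negative_prompt("weathered stone wall", terms)
# full_prompt == "weathered stone wall --no blurry, soft focus, seams"
```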

Integrating AI: Streamlining your production pipeline

Beyond individual texture generation, the true power of AI for solving workflow challenges lies in its ability to integrate and automate. This isn't just about crafting a single texture; it's about optimizing your entire asset creation pipeline for speed and consistency.

Think about batch processing: AI can rapidly upscale entire libraries of existing textures, generate basic roughness or normal maps from a large collection of albedos, or even categorize and tag your massive asset libraries for quicker access. Instead of manual, tedious tasks for every single asset, AI can handle the grunt work in moments.
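To show the shape of such a batch job, here is a sketch that derives a basic normal map for every albedo in a collection. It deliberately swaps in a classical, non-AI heuristic (treat luminance as height, then take central differences) as a stand-in for whatever model your pipeline actually calls. The function names, the in-memory image layout, and the strength parameter are all assumptions.

```python
import math


def luminance(rgb):
    """Perceptual brightness of an (r, g, b) pixel using Rec. 709 weights."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def normal_map_from_height(height, strength=1.0):
    """Build a tangent-space normal map from a height map via central differences.

    Neighbors wrap around the edges so a tileable input stays tileable.
    The Y direction convention differs between engines; flip ny if needed.
    """
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            dx = (height[y][(x + 1) % cols] - height[y][(x - 1) % cols]) * 0.5
            dy = (height[(y + 1) % rows][x] - height[(y - 1) % rows][x]) * 0.5
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Remap each component from [-1, 1] to [0, 1] for storage as color.
            row.append(tuple((c / length) * 0.5 + 0.5 for c in (nx, ny, nz)))
        normals.append(row)
    return normals


def batch_normals(albedos, strength=1.0):
    """Process a dict of name -> rows of (r, g, b) floats in one pass."""
    return {
        name: normal_map_from_height(
            [[luminance(pixel) for pixel in row] for row in image], strength
        )
        for name, image in albedos.items()
    }
```

Luminance is a crude proxy for height (dark paint is not a hole), so results from this heuristic are best treated as a first pass. The point is the loop: once a per-texture step is a function, running it over a whole library is trivial.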

Furthermore, many studios are now building custom tools or integrating AI models via APIs directly into their Digital Content Creation (DCC) software and engines, such as Maya, Blender, or Unreal Engine. This allows artists to trigger AI generation from within their familiar environment, automating repetitive tasks like UV packing, generating proxy meshes with quick textures, or even AI-assisted retopology. This deep integration means AI becomes an invisible accelerator, not a separate, clunky tool.

By automating these low-level, high-volume tasks, AI frees up valuable artist time. You move from spending hours on repetitive groundwork to focusing on the high-value, creative decisions that genuinely define the look and feel of your projects. It’s a shift from being a manual laborer to becoming a strategic director of your creative pipeline.

Your new creative co-pilot

So after all the glitches, the breakthroughs, and the workflow hacks, what’s the big takeaway? It’s easy to get stuck thinking about AI as this all-or-nothing technology that’s either going to take your job or solve every problem. The reality is far more practical and a lot more interesting.

Think of these tools less like an automated artist and more like the smartest, fastest procedural generator you’ve ever had. You’ve already adapted your workflow countless times, from hand-painting every detail to mastering procedural nodes in Substance or Mari. This is just the next evolution. The real skill, the thing that will separate the pros, is learning how to direct it, layer its output, and fold it into the craft you’ve already perfected.

Because every minute you save not having to create a generic concrete base from scratch is a minute you can spend perfecting the storytelling, the subtle water stains running down the wall, the exact shade of moss growing in the cracks. The future of texture artistry isn't about being replaced by a machine. It's about being amplified by one. It’s about letting your new co-pilot handle the tedious parts, so you can focus on what no algorithm can ever replicate: your vision, your context, and your art.

Max Calder

Max Calder is a creative technologist at Texturly. He specializes in material workflows, lighting, and rendering, but what drives him is enhancing creative workflows using technology. Whether he's writing about shader logic or exploring the art behind great textures, Max brings a thoughtful, hands-on perspective shaped by years in the industry. His favorite kind of learning? Collaborative, curious, and always rooted in real-world projects.

Latest Blogs

Beyond the Spec Sheet: A Tactile Guide to Plastic Texture Compari...

Product rendering

Texture creation

Max Calder

Dec 15, 2025

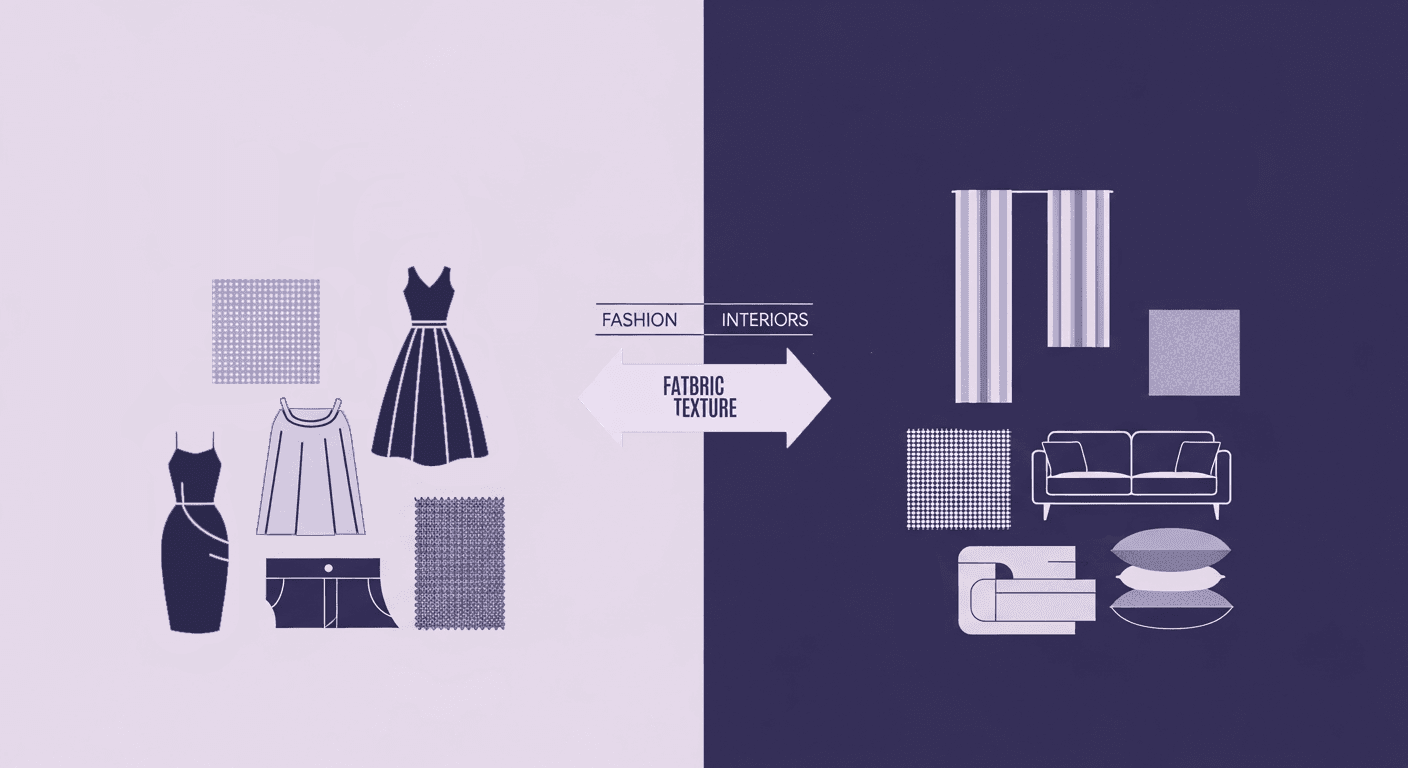

How Fabric Texture Shapes Design Strategy in Fashion and Interior...

Fabric textures

3D textures

Mira Kapoor

Dec 10, 2025

DIY Textile Texture Techniques That Make Digital Designs Come Ali...

Fabric textures

Texture creation

Max Calder

Dec 8, 2025