Navigating the Challenges of AI Texture Generators: Understanding Limits & Potential

By Max Calder | 1 July 2025 | 11 min read


You've probably felt the pull of AI texture generators. They promise a future where complex textures emerge at the click of a button, revolutionizing your workflow. But then comes reality: AI often misses the mark when it matters most, unable to grasp the intricate contextual details that even a fresh intern can spot. This article breaks down the core limitations of AI texture generators and the real potential behind them. By the end, you'll be able to judge whether AI texture tools have earned a spot in your art pipeline or are merely adding noise.

The point isn't to reject AI textures outright but to understand the "almost-but-not-quite" factor that often makes them unsuitable for production. These tools can create visually exciting patterns, yet they fall short when those images need to become reliable assets that meet your PBR standards. You'll see why AI lacks the contextual understanding that realistic textures require, and how to use these tools to complement your artistry, not replace it. So, let's cut through the hype and see what makes AI a robust assistant rather than a standalone solution in your creative arsenal.

The “Almost-but-not-quite” problem with AI textures

You’ve seen the demos for AI texture generators, prompted for "grimy cyberpunk alley floor," and gotten something that was… 90% there. It’s that last 10% that costs you.

That’s the core limitation of AI texture generators: they are incredible at creating images but often stumble when asked to create functional, production-ready assets. Think of them as a brilliant concept artist who can’t rig a model. The vision is there, but the technical execution needs a human touch.

When the magic fails: Quality and consistency issues

We’ve all been there. You get a texture that looks stunning in the little preview window. But when you apply it to a model, the cracks appear, sometimes literally.

You’ll spot common visual artifacts like distorted patterns, melted-looking details, or a strange, plastic-like sheen on everything. The AI is trained on photos, so it often bakes in lighting and reflections, which is wrong for PBR workflows, where the albedo map should carry surface color only. The result? A texture that fights your game engine’s lighting instead of working with it.

Then there’s the tiling. True seamlessness is about more than just matching edges. It's about avoiding repeating patterns that the human eye is ruthlessly good at spotting. AI often creates soft seams where the edges blend, but it struggles to produce patterns that feel naturally random across a large surface. You end up with noticeable repetition, pulling the player right out of the experience.
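A quick way to catch the edge seams described above is the classic half-offset check: shift the texture by half its width and height with wrap-around so the original borders meet in the middle, then measure how much the values jump across that wrap compared with an ordinary pixel step. The sketch below assumes a grayscale texture stored as a list of rows with values from 0 to 1; the function names and the idea of a single numeric "seam score" are illustrative, not a feature of any particular tool.

```python
from typing import List

Image = List[List[float]]  # grayscale texture: rows of values in [0, 1]


def offset_half(img: Image) -> Image:
    """Shift by half the width and height with wrap-around (like an image
    editor's Offset filter) so the original borders meet in the center."""
    h, w = len(img), len(img[0])
    return [[img[(y + h // 2) % h][(x + w // 2) % w] for x in range(w)]
            for y in range(h)]


def seam_score(img: Image) -> float:
    """Mean jump across the wrap-around borders divided by the mean jump
    between ordinary neighboring pixels. Near 1.0, the border behaves like any
    other pixel step; much higher values suggest a visible seam."""
    h, w = len(img), len(img[0])
    border = [abs(img[y][w - 1] - img[y][0]) for y in range(h)]
    border += [abs(img[h - 1][x] - img[0][x]) for x in range(w)]
    inner = [abs(img[y][x + 1] - img[y][x]) for y in range(h) for x in range(w - 1)]
    inner += [abs(img[y + 1][x] - img[y][x]) for y in range(h - 1) for x in range(w)]
    mean_border = sum(border) / len(border)
    mean_inner = sum(inner) / len(inner)
    return mean_border / max(mean_inner, 1e-6)


# A horizontal ramp does not tile: its left and right edges differ sharply.
ramp = [[x / 7 for x in range(8)] for _ in range(8)]
# A checkerboard with an even size tiles perfectly.
checker = [[float((x + y) % 2) for x in range(8)] for y in range(8)]
print(round(seam_score(ramp), 2), round(seam_score(checker), 2))  # 7.0 1.0
```

This only flags edge seams. The repetition problem needs a wider view, such as tiling the texture 3 by 3 and judging it by eye.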

These generative AI texture problems aren't just minor annoyances; they're pipeline blockers.

The struggle with specifics: Customization and control constraints

This is where the frustration really sets in. You have a specific vision of weathered oak planks, but with less moss and a deeper grain. You tweak your prompt: "detailed weathered oak planks, deep grain, dry, no moss."

The AI returns… slightly different weathered oak, now with some weird splotches and maybe more moss.

Here’s why: you’re not giving instructions to an artist. You’re providing input to a complex neural network. The limitations of machine learning in texture generation mean that iterating on a design isn’t a conversation; it’s a roll of the dice. The AI doesn’t understand “deeper grain” as a physical concept. It just knows which pixels are statistically associated with those words in its training data. Getting the exact texture you need feels less like directing and more like searching, and that’s a huge drain on creative momentum.

This black box approach is the opposite of the predictable, controllable pipeline you’ve worked so hard to build. And that brings us to the core technical hurdles.

Unpacking the “Why”: Core AI texture generation challenges

So, why does the AI stumble? It's not just about refining the algorithms. The AI texture generation challenges run deeper, stemming from a fundamental disconnect between what a machine sees and what an artist understands. It's a gap between pixels and purpose.

Lost in translation: Can AI understand contextual texture requirements?

In short, no. Not in the way a human artist does. An AI model can generate a beautiful wood texture, but it lacks the contextual intelligence to apply it correctly.

Ask it for a wood texture, and you might get something perfect for a flat tabletop. But apply that same texture to a sphere, and the illusion shatters. The grain won't wrap believably because the AI doesn't understand that the texture represents a material that was once a tree, with growth rings and a directional core. On a plank, the grain should be linear. On a carved post, it should follow the form.

The AI is just mapping a 2D pattern. It misses the mark on:

- Scale: Generating cobblestones that are the size of basketballs.

- Shape: Creating fabric weaves that don't stretch or compress correctly around a character's arm.

- Function: Producing a metal texture with wear and tear in places that would never see friction.
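The scale problem, at least, can be checked with simple arithmetic. If you know how much real-world surface one tile of the texture is meant to cover, you can convert a feature's size in pixels into meters and compare it with the real object. The numbers below (a 2048 px texture covering 2 m, cobblestones expected around 0.15 m) are hypothetical examples, and the helper names are illustrative.

```python
def feature_size_m(feature_px: float, texture_px: int, tile_m: float) -> float:
    """Real-world size (meters) of a feature measured in pixels, given the
    texture's width in pixels and the real-world width one tile covers."""
    texel_density = texture_px / tile_m  # pixels per meter
    return feature_px / texel_density


def scale_ok(feature_px: float, texture_px: int, tile_m: float,
             expected_m: float, tolerance: float = 0.5) -> bool:
    """True if the feature lands within +/- tolerance (a fraction) of the
    size you expect for the real object."""
    size = feature_size_m(feature_px, texture_px, tile_m)
    return abs(size - expected_m) <= tolerance * expected_m


# Hypothetical: a 2048 px texture meant to cover 2 m, where the AI drew
# cobblestones about 250 px across.
print(feature_size_m(250, 2048, 2.0))  # 0.244140625 (meters, basketball-sized)
print(scale_ok(250, 2048, 2.0, 0.15))  # False for stones expected near 0.15 m
```

When the check fails, tiling the texture more times across the surface shrinks every feature, which is one more reason seamless tiling matters.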

An artist knows a sword handle is worn where the hand grips it. An AI just adds "worn" artifacts wherever its data suggests they look cool. This is the hidden insight: the biggest limitation is the AI's inability to connect a texture to an object's history and function.

The realism gap: What technical limitations prevent realistic texture creation?

Beyond context, there are hard technical limits. Artists don't just paint colors; they build materials with physical properties. This is where AI often generates a pretty picture that falls apart under the scrutiny of a PBR engine.

The main challenge is replicating complex material physics. Think about materials that do more than just reflect light:

- Subsurface scattering (SSS): The way light penetrates the surface of materials like skin, marble, or jade. AI can replicate the look of SSS in a static image, but it can't generate an accurate SSS map that responds correctly to dynamic in-game lighting.

- Iridescence and anisotropy: The shifting colors on a beetle's shell or the stretched highlights on brushed metal. These effects are born from micro-surface structures that are incredibly difficult for current models to deconstruct and output as functional PBR maps.

And how do AI texture generators handle complex surface details like rust and wear? They mimic them aesthetically. The AI can generate a fantastic-looking rust patch. But it won't generate a mask that logically places rust in crevices where water would pool or scuff marks along exposed edges. It gives you the what (rust) without the why (water damage), which is precisely what procedural tools and manual painting are for.
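The "why" the AI skips can be written down as rules. A common procedural approach, sketched below, drives rust from an ambient occlusion (AO) bake, since occluded crevices are roughly where water and dirt collect, and drives edge wear from a curvature bake, since convex edges are what get scuffed. The sketch assumes both bakes are grayscale lists of rows with values from 0 to 1, AO at 1.0 on open surfaces, and curvature at 0.5 for flat areas with brighter meaning more convex. Those conventions vary between bakers, and the thresholds are hypothetical starting points to tune.

```python
from typing import List

Image = List[List[float]]  # grayscale bake, values in [0, 1]


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Smooth 0-to-1 ramp as x moves from edge0 to edge1 (either direction).
    edge0 and edge1 must differ."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def rust_mask(ao: Image, start: float = 0.8, full: float = 0.4) -> Image:
    """Rust follows occlusion: none where AO >= start, full where AO <= full.
    Assumes AO is 1.0 on open surfaces and darker in crevices."""
    return [[smoothstep(start, full, v) for v in row] for row in ao]


def edge_wear_mask(curv: Image, start: float = 0.55, full: float = 0.75) -> Image:
    """Scuffs follow convexity: none at or below start, full at or above full.
    Assumes a curvature bake where 0.5 is flat and brighter is more convex."""
    return [[smoothstep(start, full, v) for v in row] for row in curv]


ao = [[1.0, 0.6, 0.3]]     # open surface, shallow dip, deep crevice
curv = [[0.5, 0.65, 0.9]]  # flat, soft edge, sharp edge
print(rust_mask(ao))         # approximately [[0.0, 0.5, 1.0]]
print(edge_wear_mask(curv))  # approximately [[0.0, 0.5, 1.0]]
```

In practice these masks are then broken up with noise and refined by hand, but the placement logic comes from the geometry, not from what "looks cool."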

The resource drain: Computational texture synthesis demands

Finally, there’s the brute-force reality of it all. Generating high-resolution, detailed textures is incredibly demanding. This is computational texture synthesis at its most expensive.

Want a quick 1K texture? Most models can generate that in seconds. But what about a set of production-ready 4K or 8K maps with high bit depth? Pixel count grows with the square of the resolution: a 4K map holds 16 times the pixels of a 1K map, and an 8K map 64 times as many, so the time, memory, and processing power required climb steeply. This creates a bottleneck. Do you sacrifice quality for speed, or wait minutes for a single iteration that might not even be what you wanted?
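The raw data side of that bottleneck is easy to put into numbers. The helper below computes the uncompressed size of a square texture set; the five-map set and its channel counts are assumptions for illustration, and real pipelines usually pack channels and compress, so treat the results as the size of the raw data rather than what ships. It says nothing about generation time, which depends on the model and hardware.

```python
from typing import Mapping

# Hypothetical PBR set: map name -> channel count (unpacked, uncompressed).
PBR_SET = {"albedo": 3, "normal": 3, "roughness": 1, "metallic": 1, "height": 1}


def set_size_mib(resolution: int, bits_per_channel: int,
                 maps: Mapping[str, int] = PBR_SET) -> float:
    """Raw size in MiB of a square texture set at the given resolution."""
    pixels = resolution * resolution
    channels = sum(maps.values())
    return pixels * channels * bits_per_channel / 8 / (1024 * 1024)


for res in (1024, 4096, 8192):
    ratio = (res * res) // (1024 * 1024)  # pixel count relative to 1K
    print(f"{res} px: {ratio}x the pixels of 1K, "
          f"{set_size_mib(res, 8):.0f} MiB at 8-bit, "
          f"{set_size_mib(res, 16):.0f} MiB at 16-bit")
# 1024 px: 1x the pixels of 1K, 9 MiB at 8-bit, 18 MiB at 16-bit
# 4096 px: 16x the pixels of 1K, 144 MiB at 8-bit, 288 MiB at 16-bit
# 8192 px: 64x the pixels of 1K, 576 MiB at 8-bit, 1152 MiB at 16-bit
```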

When managing a pipeline, this becomes a critical calculation. The time spent generating, reviewing, and regenerating textures can quickly outweigh the initial speed advantage, especially when the output requires significant cleanup anyway.
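One rough way to run that calculation: if each generation has some probability of producing a usable base, the number of attempts until the first usable one follows a geometric distribution, so you should expect 1/p attempts on average. The sketch below adds generation, review, and cleanup time; every input is a hypothetical placeholder to replace with measurements from your own team.

```python
def expected_ai_minutes(gen_min: float, review_min: float,
                        p_usable: float, cleanup_min: float) -> float:
    """Expected minutes to one finished texture via AI generation.

    Attempts until the first usable result follow a geometric distribution
    with mean 1 / p_usable; each attempt costs generation plus review time,
    and the usable result still needs one round of cleanup.
    """
    if not 0.0 < p_usable <= 1.0:
        raise ValueError("p_usable must be in (0, 1]")
    return (gen_min + review_min) / p_usable + cleanup_min


# Hypothetical numbers: 2 min to generate, 3 min to review, 60 min of cleanup,
# compared against 120 min to author the texture by hand.
manual_min = 120.0
for p in (0.2, 0.05):
    ai_min = expected_ai_minutes(2.0, 3.0, p, 60.0)
    print(f"p_usable={p}: ~{ai_min:.0f} min via AI vs {manual_min:.0f} min by hand")
# p_usable=0.2: ~85 min via AI vs 120 min by hand
# p_usable=0.05: ~160 min via AI vs 120 min by hand
```

With these toy numbers, dropping from one usable result in five to one in twenty turns the AI route from faster to slower than authoring by hand, which is why your real acceptance rate is the number worth tracking.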

Making it work: Integrating AI textures into a real-world pipeline

Given these challenges, it’s easy to dismiss AI texture generators as a gimmick. But that’s a mistake. The key isn’t to expect a one-click solution. It’s to use these tools for what they’re good at: creating incredible starting points.

You wouldn’t expect a rough sketch to be the final painting. Treat AI-generated textures the same way: as a powerful base for your expertise.

From prompt to polish: Using AI as a creative starting point

Think of the AI as a hyper-fast junior artist. It can flood you with ideas and give you a rich foundation to build upon, saving you from the blank canvas problem.

Here’s a practical workflow that works:

1. Generate the albedo first: Use the AI to generate your base color map. Prompt for the overall look and feel: "cracked desert earth," "stylized painted wood," etc. Don't worry about perfect realism yet.

2. Deconstruct and rebuild: Take that generated albedo into a tool like Texturly or Photoshop. This is your base layer. From here, you’ll create the other PBR maps yourself. The AI has done the creative heavy lifting of establishing the color palette and pattern.

3. Hand-paint or procedurally generate masks: This is where you add the logic the AI missed. Use procedural generators, curvature maps, and AO bakes to create masks for wear, grime, and moisture. Paint in detail by hand to guide the eye and tell a story with the material. You’re layering your human understanding of context on top of the AI's raw output.

4. Combine with your library: Blend the AI-generated texture with your existing, trusted procedural textures. Use the AI to create a unique organic pattern, then layer it with a high-quality procedural noise for fine detail.
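The "combine with your library" step can start as a simple overlay blend. The sketch below generates tileable value noise (random values on a coarse grid, smoothly interpolated, with the grid wrapping so the result tiles) and overlays it on a grayscale AI base at low strength. It is a stand-in for the higher-quality procedural noise in your library; the function names, grid size, and strength are hypothetical, and on a full-color albedo you would apply it per channel.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale, values in [0, 1]


def tileable_value_noise(size: int, cells: int, seed: int = 0) -> Image:
    """Value noise: random values on a cells x cells lattice, smoothly
    interpolated. Lattice lookups wrap around, so the noise tiles."""
    rng = random.Random(seed)
    lattice = [[rng.random() for _ in range(cells)] for _ in range(cells)]

    def fade(t: float) -> float:
        return t * t * (3.0 - 2.0 * t)  # smoothstep weight

    out: Image = []
    for y in range(size):
        gy = y * cells / size
        y0 = int(gy)
        y1 = (y0 + 1) % cells  # wrap: the last row blends into the first
        ty = fade(gy - y0)
        row = []
        for x in range(size):
            gx = x * cells / size
            x0 = int(gx)
            x1 = (x0 + 1) % cells
            tx = fade(gx - x0)
            top = lattice[y0][x0] * (1 - tx) + lattice[y0][x1] * tx
            bottom = lattice[y1][x0] * (1 - tx) + lattice[y1][x1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        out.append(row)
    return out


def overlay(base: float, blend: float) -> float:
    """Standard overlay blend: a blend value of 0.5 leaves the base unchanged."""
    if base < 0.5:
        return 2.0 * base * blend
    return 1.0 - 2.0 * (1.0 - base) * (1.0 - blend)


def add_fine_detail(base: Image, noise: Image, strength: float = 0.3) -> Image:
    """Overlay the noise onto the base, then mix back toward the base so the
    noise adds detail without overpowering the AI-generated pattern."""
    return [[b + (overlay(b, n) - b) * strength for b, n in zip(br, nr)]
            for br, nr in zip(base, noise)]


ai_base = [[0.2, 0.4, 0.6, 0.8] for _ in range(4)]  # stand-in for an AI albedo
detail = tileable_value_noise(size=4, cells=2, seed=7)
result = add_fine_detail(ai_base, detail, strength=0.3)
```

Overlay suits this job because mid-gray noise leaves the base untouched, so the AI pattern keeps its overall color while the noise only pushes local values up or down.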

This approach gives you the best of both worlds: the speed and novelty of AI, and the control and quality of a traditional workflow.

Aligning with PBR standards: A practical checklist

An AI-generated texture is rarely PBR-ready out of the box. Before it ever touches your engine, it needs to be validated. You're the gatekeeper for quality and consistency.

Here’s a simple checklist to run through:

1. Deblur and sharpen: AI outputs can be soft. Run a sharpening filter to bring back crisp details, but watch out for halos and artifacts.

2. Remove baked-in lighting: This is non-negotiable. Use equalization tools or gradient maps to flatten the albedo. Your color map should contain only color, no light or shadow information. Check for highlights and dark spots that indicate baked-in lighting.

3. Validate albedo values: Keep your albedo map within physically plausible brightness ranges for its material type. Almost no real-world surface is pure black or pure white. Clamp the values to a safe range (e.g., roughly 30 to 240 in 8-bit sRGB for most non-metals) so the material reacts predictably in-engine.

4. Author roughness and metallic maps: Never trust an AI-generated roughness map without scrutiny. The AI doesn’t understand how surface finish relates to material function. Create your own by converting the albedo to grayscale and aggressively tweaking the levels and contrast. Paint in variations, smudges, wet spots, and polished edges to make the material feel real. Metallic maps should almost always be binary: 0 for non-metals, 1 for raw metals.
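The checklist maps onto a few small image operations. The sketch below works on one channel stored as a list of rows (run it per channel for color), uses a wrap-around box blur as its only filter, and relies on hypothetical defaults (blur radii, the 30 to 240 range from step 3, the roughness levels) that you would tune per material. The lighting step is deliberately crude: dividing by a heavy blur removes any large-scale variation, including color changes that belong in the albedo, so check its output by eye.

```python
from typing import List, Tuple

Channel = List[List[float]]  # one channel; albedo in 8-bit units (0-255)


def box_blur(img: Channel, radius: int) -> Channel:
    """Box blur with wrap-around, so tileable textures stay tileable."""
    h, w = len(img), len(img[0])
    n = (2 * radius + 1) ** 2
    offsets = range(-radius, radius + 1)
    return [[sum(img[(y + dy) % h][(x + dx) % w] for dy in offsets for dx in offsets) / n
             for x in range(w)] for y in range(h)]


def unsharp(img: Channel, radius: int = 1, amount: float = 0.5) -> Channel:
    """Step 1: unsharp mask. Adds back the difference from a blurred copy;
    high amounts produce the halos the checklist warns about."""
    low = box_blur(img, radius)
    return [[min(max(v + amount * (v - b), 0.0), 255.0) for v, b in zip(r, lr)]
            for r, lr in zip(img, low)]


def flatten_lighting(img: Channel, radius: int) -> Channel:
    """Step 2 (crude): divide out large-scale brightness variation, treating it
    as baked light and shadow, then restore the overall mean."""
    low = box_blur(img, radius)
    mean = sum(map(sum, img)) / (len(img) * len(img[0]))
    return [[v / max(b, 1e-6) * mean for v, b in zip(r, lr)]
            for r, lr in zip(img, low)]


def clamp_albedo(img: Channel, lo: float = 30.0,
                 hi: float = 240.0) -> Tuple[Channel, float]:
    """Step 3: clamp to a plausible non-metal range and report the fraction of
    pixels that were outside it (a high fraction deserves a closer look)."""
    flat = [v for r in img for v in r]
    outside = sum(1 for v in flat if v < lo or v > hi) / len(flat)
    return [[min(max(v, lo), hi) for v in r] for r in img], outside


def roughness_from_albedo(img: Channel, in_lo: float = 60.0, in_hi: float = 200.0,
                          out_lo: float = 0.35, out_hi: float = 0.9,
                          invert: bool = True) -> Channel:
    """Step 4: levels-style remap of grayscale albedo into a 0-1 roughness
    range. Whether darker means rougher depends on the material, hence
    `invert`. Only a starting point to paint over."""
    def remap(v: float) -> float:
        t = min(max((v - in_lo) / (in_hi - in_lo), 0.0), 1.0)
        if invert:
            t = 1.0 - t
        return out_lo + t * (out_hi - out_lo)
    return [[remap(v) for v in r] for r in img]


def binarize_metallic(mask: Channel, threshold: float = 0.5) -> Channel:
    """Step 4: force a 0-1 metallic mask to 0 (non-metal) or 1 (raw metal)."""
    return [[1.0 if v >= threshold else 0.0 for v in r] for r in mask]


albedo = [[12.0, 128.0, 250.0]]
clamped, outside = clamp_albedo(albedo)
print(clamped, outside)  # [[30.0, 128.0, 240.0]] 0.6666666666666666
```

Reporting the out-of-range fraction alongside the clamp is a deliberate choice: a texture where a large share of pixels needed clamping usually has a deeper problem, such as baked lighting, that clamping only hides.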

By following these steps, you transform a flawed but interesting image into a reliable, production-ready asset. You’re not replacing artistry; you’re focusing it where it matters most.

Your turn: Making AI the co-pilot, not the artist

So, after unpacking all the frustrating "almosts" and technical hurdles, what’s the final verdict on AI texture generators?

They aren’t the one-click magic button we were sold. But thinking of them as a failure is the wrong move. The real takeaway is a shift in perspective. Instead of seeing a flawed artist, see a hyper-fast, slightly clueless but very imaginative junior assistant.

This tool won't replace your expertise. It can't. It doesn’t understand the why behind wear and tear, the physics of light on a surface, or the story a material tells. That’s still your job, and frankly, it’s the most important part.

The real opportunity here isn't to automate your role away, but to automate the most tedious part of it: the blank canvas. Use AI to flood your screen with ideas, to generate unique starting points, and to get that initial 70% done in seconds. Then, you step in. You provide the context, the logic, and the polish that transforms a cool image into a production-ready asset.

So the question to ask isn't "Should we use AI texture tools?" Instead, ask: "How can we use these tools to spend less time on ideation and more time on the final, crucial details that sell the world we're building?"

You’ve got the eye. You’ve got the technical skill. Now you just have a new, powerful, if a bit quirky, tool in your kit. Go make it work for you.

Max Calder

Max Calder is a creative technologist at Texturly. He specializes in material workflows, lighting, and rendering, but what drives him is enhancing creative workflows using technology. Whether he's writing about shader logic or exploring the art behind great textures, Max brings a thoughtful, hands-on perspective shaped by years in the industry. His favorite kind of learning? Collaborative, curious, and always rooted in real-world projects.

Latest Blogs

Beyond the Spec Sheet: A Tactile Guide to Plastic Texture Compari...

Product rendering

Texture creation

Max Calder

Dec 15, 2025

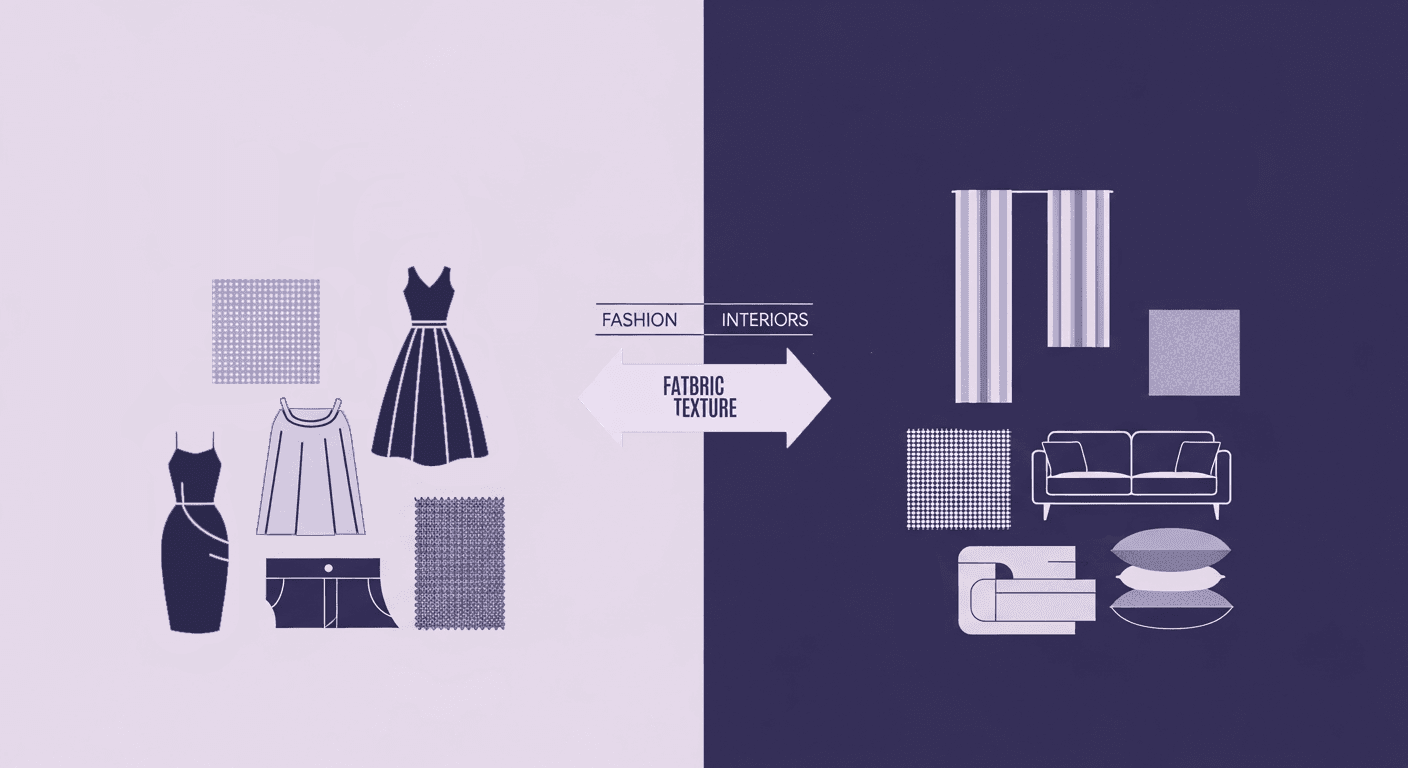

How Fabric Texture Shapes Design Strategy in Fashion and Interior...

Fabric textures

3D textures

Mira Kapoor

Dec 10, 2025

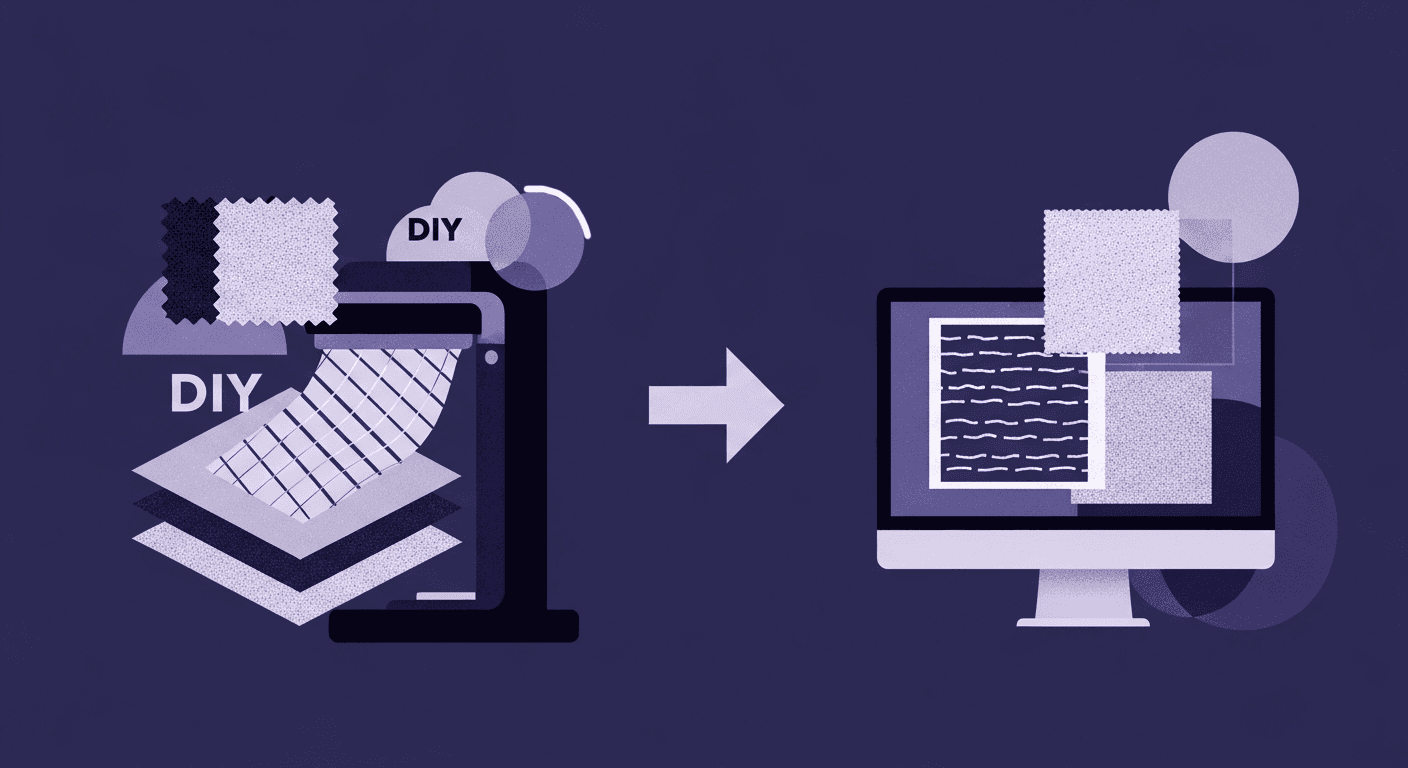

DIY Textile Texture Techniques That Make Digital Designs Come Ali...

Fabric textures

Texture creation

Max Calder

Dec 8, 2025